In this post, we'll use the HDFS command 'bin\hdfs dfs' with several options (mkdir, copyFromLocal, ls and cat) and then run the wordcount MapReduce job provided in %HADOOP_HOME%\share\hadoop\mapreduce\hadoop-mapreduce-examples-2.2.0.jar. When the job completes successfully on the Single Node (pseudo-distributed mode) cluster, it writes an output containing the number of occurrences of each word in the input.

Tools and Technologies used in this article

1. Install Apache Hadoop 2.2.0 in Microsoft Windows OS

If Apache Hadoop 2.2.0 is not already installed, follow the post Build, Install, Configure and Run Apache Hadoop 2.2.0 in Microsoft Windows OS.

2. Start HDFS (Namenode and Datanode) and YARN (Resource Manager and Node Manager)

Run the following commands.

Command Prompt

C:\Users\abhijitg>cd c:\hadoop

c:\hadoop>sbin\start-dfs

c:\hadoop>sbin\start-yarn

starting yarn daemons

The Namenode, Datanode, Resource Manager and Node Manager will start within a few minutes, after which the Single Node (pseudo-distributed mode) cluster is ready to execute Hadoop MapReduce jobs.
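One way to confirm that all four daemons are up is the JDK's `jps` tool, which lists running Java processes as "<pid> <MainClassName>". The Python sketch below assumes `jps` is on PATH; the names `missing_daemons` and `check_cluster` are hypothetical helpers, not part of Hadoop.

```python
import subprocess

# Main-class names that `jps` is expected to print for the four daemons
# started by sbin\start-dfs and sbin\start-yarn. Other processes (e.g. Jps itself) are ignored.
EXPECTED_DAEMONS = {"NameNode", "DataNode", "ResourceManager", "NodeManager"}


def missing_daemons(jps_output: str) -> set:
    """Return the expected daemons that do not appear in `jps` output.

    Each `jps` line has the form "<pid> <MainClassName>".
    """
    running = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].isdigit():
            running.add(parts[1])
    return EXPECTED_DAEMONS - running


def check_cluster() -> set:
    """Run `jps` (assumed to be on PATH) and report which daemons are missing."""
    result = subprocess.run(["jps"], capture_output=True, text=True, check=True)
    return missing_daemons(result.stdout)
```

An empty set from `check_cluster()` means all four daemons are running; anything else names the daemons whose console windows should be checked for errors.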

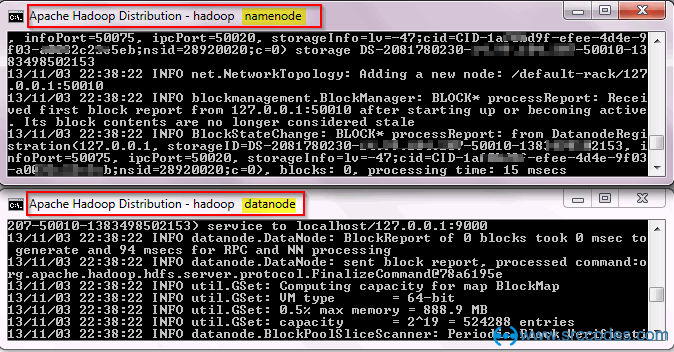

Namenode & Datanode:

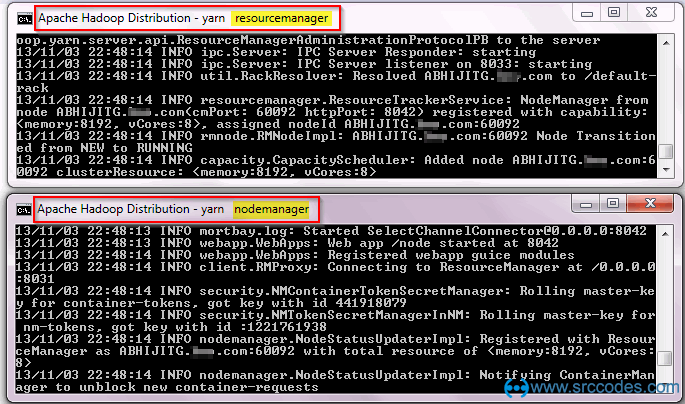

Resource Manager & Node Manager:

3. Run wordcount MapReduce job

Now we'll run the wordcount MapReduce job available in %HADOOP_HOME%\share\hadoop\mapreduce\hadoop-mapreduce-examples-2.2.0.jar.
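The commands in this section can also be driven from a script. The following is a minimal Python sketch, assuming Hadoop is installed in c:\hadoop and that the Windows build provides bin\hdfs.cmd and bin\yarn.cmd; `build_steps` and `run_steps` are hypothetical helper names, and the local file path is the one used in this post.

```python
import ntpath
import subprocess

EXAMPLES_JAR = "share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar"


def build_steps(hadoop_home: str = r"c:\hadoop",
                local_file: str = "c:/file1.txt",
                input_dir: str = "input",
                output_dir: str = "output") -> list:
    """Return this section's commands as argv lists, in execution order."""
    # Assumption: the .cmd launchers live in %HADOOP_HOME%\bin.
    hdfs = ntpath.join(hadoop_home, "bin", "hdfs.cmd")
    yarn = ntpath.join(hadoop_home, "bin", "yarn.cmd")
    file_name = local_file.replace("\\", "/").rsplit("/", 1)[-1]
    return [
        [hdfs, "dfs", "-mkdir", input_dir],
        [hdfs, "dfs", "-copyFromLocal", local_file, input_dir],
        [hdfs, "dfs", "-ls", input_dir],
        [hdfs, "dfs", "-cat", f"{input_dir}/{file_name}"],
        [yarn, "jar", EXAMPLES_JAR, "wordcount", input_dir, output_dir],
        # No shell is involved, so "*" reaches HDFS unexpanded and HDFS resolves the glob.
        [hdfs, "dfs", "-cat", f"{output_dir}/*"],
    ]


def run_steps(hadoop_home: str = r"c:\hadoop") -> None:
    """Run every step with %HADOOP_HOME% as working directory; stop at the first failure."""
    for argv in build_steps(hadoop_home):
        print(">", " ".join(argv))
        # The relative jar path above is resolved against cwd.
        subprocess.run(argv, cwd=hadoop_home, check=True)
```

Keeping the command list separate from its execution makes it easy to print or inspect before running anything. Note that the wordcount job refuses to start if the output directory already exists, so remove it (for example with `bin\hdfs dfs -rm -r output`) before a second run.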

- Create a text file with some content. We'll pass this file as input to the wordcount MapReduce job for counting words.

C:\file1.txt

Install Hadoop Run Hadoop Wordcount Mapreduce Example

- Create a directory (say 'input') in HDFS to hold the text files (say 'file1.txt') whose words will be counted.

C:\Users\abhijitg>cd c:\hadoop

C:\hadoop>bin\hdfs dfs -mkdir input

- Copy the text file (say 'file1.txt') from the local disk to the newly created 'input' directory in HDFS.

C:\hadoop>bin\hdfs dfs -copyFromLocal c:/file1.txt input

- Check the content of the copied file.

C:\hadoop>bin\hdfs dfs -ls input
Found 1 items
-rw-r--r-- 1 ABHIJITG supergroup 55 2014-02-03 13:19 input/file1.txt

C:\hadoop>bin\hdfs dfs -cat input/file1.txt
Install Hadoop Run Hadoop Wordcount Mapreduce Example

- Run the wordcount MapReduce job provided in %HADOOP_HOME%\share\hadoop\mapreduce\hadoop-mapreduce-examples-2.2.0.jar.

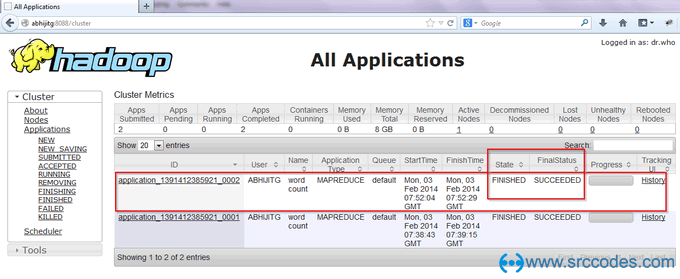

C:\hadoop>bin\yarn jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar wordcount input output
14/02/03 13:22:02 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032
14/02/03 13:22:03 INFO input.FileInputFormat: Total input paths to process : 1
14/02/03 13:22:03 INFO mapreduce.JobSubmitter: number of splits:1
:
:
14/02/03 13:22:04 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1391412385921_0002
14/02/03 13:22:04 INFO impl.YarnClientImpl: Submitted application application_1391412385921_0002 to ResourceManager at /0.0.0.0:8032
14/02/03 13:22:04 INFO mapreduce.Job: The url to track the job: http://ABHIJITG:8088/proxy/application_1391412385921_0002/
14/02/03 13:22:04 INFO mapreduce.Job: Running job: job_1391412385921_0002
14/02/03 13:22:14 INFO mapreduce.Job: Job job_1391412385921_0002 running in uber mode : false
14/02/03 13:22:14 INFO mapreduce.Job: map 0% reduce 0%
14/02/03 13:22:22 INFO mapreduce.Job: map 100% reduce 0%
14/02/03 13:22:30 INFO mapreduce.Job: map 100% reduce 100%
14/02/03 13:22:30 INFO mapreduce.Job: Job job_1391412385921_0002 completed successfully
14/02/03 13:22:31 INFO mapreduce.Job: Counters: 43
    File System Counters
        FILE: Number of bytes read=89
        FILE: Number of bytes written=160142
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=171
        HDFS: Number of bytes written=59
        HDFS: Number of read operations=6
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=5657
        Total time spent by all reduces in occupied slots (ms)=6128
    Map-Reduce Framework
        Map input records=2
        Map output records=7
        Map output bytes=82
        Map output materialized bytes=89
        Input split bytes=116
        Combine input records=7
        Combine output records=6
        Reduce input groups=6
        Reduce shuffle bytes=89
        Reduce input records=6
        Reduce output records=6
        Spilled Records=12
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=145
        CPU time spent (ms)=1418
        Physical memory (bytes) snapshot=368246784
        Virtual memory (bytes) snapshot=513716224
        Total committed heap usage (bytes)=307757056
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=55
    File Output Format Counters
        Bytes Written=59

- Check the output.

The job can also be followed in the Resource Manager web UI at http://abhijitg:8088/cluster.

C:\hadoop>bin\hdfs dfs -cat output/*
Example 1
Hadoop 2
Install 1
Mapreduce 1
Run 1
Wordcount 1
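To see where the job's counters come from, the following Python sketch replays the wordcount logic locally on the sample text. It mirrors the design of the bundled example (a tokenizing mapper, with the same summing function used as both combiner and reducer) but it is not Hadoop code: the function names are hypothetical, and the split of file1.txt into two lines is an assumption based on "Map input records=2".

```python
from collections import Counter

# Assumption: file1.txt holds these two lines. The log reports "Map input records=2",
# but where the line break falls is a guess; it does not change any count below.
FILE1_LINES = ["Install Hadoop", "Run Hadoop Wordcount Mapreduce Example"]


def map_words(lines):
    """Mapper: emit (word, 1) for every whitespace-separated token."""
    return [(word, 1) for line in lines for word in line.split()]


def sum_counts(pairs):
    """Combiner and reducer: add up the counts for each word, sorted by key."""
    totals = Counter()
    for word, count in pairs:
        totals[word] += count
    # Hadoop sorts keys bytewise; for ASCII words that matches Python's str order.
    return sorted(totals.items())


def format_output(pairs):
    """Write one 'word<TAB>count' line per key, as the job's text output does."""
    return "".join(f"{word}\t{count}\n" for word, count in pairs)


mapped = map_words(FILE1_LINES)      # compare with "Map output records"
combined = sum_counts(mapped)        # compare with "Combine output records"
reduced = sum_counts(combined)       # compare with "Reduce output records"
output_text = format_output(reduced)
```

Here `mapped` has 7 pairs, `combined` and `reduced` have 6, and `output_text` is 59 bytes long, matching "Map output records=7", "Combine output records=6", "Reduce output records=6" and "Bytes Written=59" in the log. The combiner only merges the two "Hadoop" records, which is why it shrinks 7 records to 6.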
