If a Hadoop example fails on Windows with "Exception from container-launch: org.apache.hadoop.util.Shell$ExitCodeException" in the FAILED application's Diagnostics (or at the command prompt), and the container's 'stderr' log shows "java.lang.NoClassDefFoundError: org/apache/hadoop/service/CompositeService", add all the required Hadoop jars to the 'yarn.application.classpath' property in the yarn-site.xml configuration file.

Tools and Technologies used in this article :

Exception

Command Prompt

C:\hadoop>bin\yarn jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar pi 5 50

Number of Maps = 5

Samples per Map = 50

Wrote input for Map #0

Wrote input for Map #1

Wrote input for Map #2

Wrote input for Map #3

Wrote input for Map #4

Starting Job

14/02/27 14:53:04 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

14/02/27 14:53:04 INFO input.FileInputFormat: Total input paths to process : 5

14/02/27 14:53:04 INFO mapreduce.JobSubmitter: number of splits:5

:

:

:

14/02/27 14:53:05 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1393491185191_0001

14/02/27 14:53:06 INFO impl.YarnClientImpl: Submitted application application_1393491185191_0001 to ResourceManager at /0.0.0.0:8032

14/02/27 14:53:06 INFO mapreduce.Job: The url to track the job: http://ABHIJITG:8088/proxy/application_1393491185191_0001/

14/02/27 14:53:06 INFO mapreduce.Job: Running job: job_1393491185191_0001

14/02/27 14:53:15 INFO mapreduce.Job: Job job_1393491185191_0001 running in uber mode : false

14/02/27 14:53:20 INFO mapreduce.Job: map 0% reduce 0%

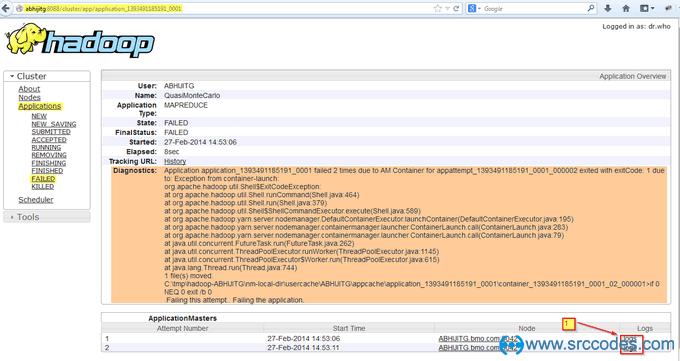

14/02/27 14:53:20 INFO mapreduce.Job: Job job_1393491185191_0001 failed with state FAILED due to: Application application_1393491185191_0001 failed 2 times due to AM Container for appattempt_1393491185191_0001_000002 exited with exitCode: 1 due to: Exception from container-launch:

org.apache.hadoop.util.Shell$ExitCodeException:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:464)

at org.apache.hadoop.util.Shell.run(Shell.java:379)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:589)

at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:195)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:283)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:79)

at java.util.concurrent.FutureTask.run(FutureTask.java:262)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:744)

1 file(s) moved.

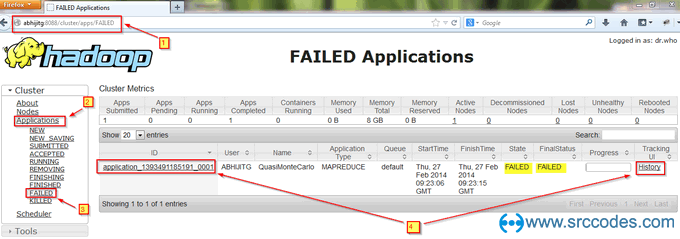

FAILED Applications - http://abhijitg:8088/cluster/apps/FAILED

Diagnostics of FAILED application - http://abhijitg:8088/cluster/app/application_1393491185191_0001

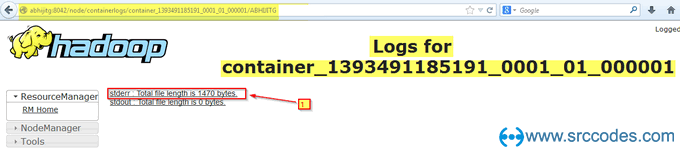

containerlogs - http://abhijitg:8042/node/containerlogs/container_1393491185191_0001_01_000001/ABHIJITG/

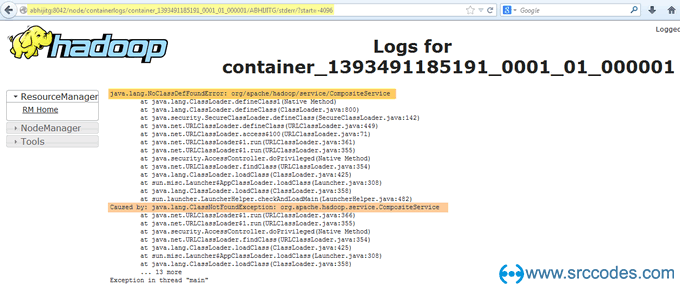

'stderr' containerlogs - http://abhijitg:8042/node/containerlogs/container_1393491185191_0001_01_000001/ABHIJITG/stderr/?start=-4096

%HADOOP_HOME%\logs\userlogs\application_1393491185191_0001\container_1393491185191_0001_01_000001\stderr

java.lang.NoClassDefFoundError: org/apache/hadoop/service/CompositeService

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:800)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)

at java.net.URLClassLoader.access$100(URLClassLoader.java:71)

at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

at sun.launcher.LauncherHelper.checkAndLoadMain(LauncherHelper.java:482)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.service.CompositeService

at java.net.URLClassLoader$1.run(URLClassLoader.java:366)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

... 13 more

Exception in thread "main"

Fix

Add all the required Hadoop jars to the property 'yarn.application.classpath' in yarn-site.xml configuration file.

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.application.classpath</name>

<value>

%HADOOP_HOME%\etc\hadoop,

%HADOOP_HOME%\share\hadoop\common\*,

%HADOOP_HOME%\share\hadoop\common\lib\*,

%HADOOP_HOME%\share\hadoop\hdfs\*,

%HADOOP_HOME%\share\hadoop\hdfs\lib\*,

%HADOOP_HOME%\share\hadoop\mapreduce\*,

%HADOOP_HOME%\share\hadoop\mapreduce\lib\*,

%HADOOP_HOME%\share\hadoop\yarn\*,

%HADOOP_HOME%\share\hadoop\yarn\lib\*

</value>

</property>

</configuration>

Note :

If HADOOP_COMMON_HOME, HADOOP_CONF_DIR, HADOOP_HDFS_HOME and HADOOP_YARN_HOME point to different locations on your machine, modify the 'yarn.application.classpath' entries above accordingly. In my case, all of them are based on the same %HADOOP_HOME%.
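As a sketch of that adjustment, the fragment below rewrites the property for an installation where each component has its own home directory. It assumes that %HADOOP_CONF_DIR%, %HADOOP_COMMON_HOME%, %HADOOP_HDFS_HOME% and %HADOOP_YARN_HOME% are all defined in the environment the containers are launched in. The MapReduce entries are left on %HADOOP_HOME%, as in the configuration above, and should point to wherever the MapReduce jars live on your machine. Treat it as an untested starting point rather than a verified configuration.

```xml
<!-- Sketch only: assumes the HADOOP_* variables used below are defined
     in the environment the YARN containers are launched in. -->
<property>
  <name>yarn.application.classpath</name>
  <value>
    %HADOOP_CONF_DIR%,
    %HADOOP_COMMON_HOME%\share\hadoop\common\*,
    %HADOOP_COMMON_HOME%\share\hadoop\common\lib\*,
    %HADOOP_HDFS_HOME%\share\hadoop\hdfs\*,
    %HADOOP_HDFS_HOME%\share\hadoop\hdfs\lib\*,
    %HADOOP_HOME%\share\hadoop\mapreduce\*,
    %HADOOP_HOME%\share\hadoop\mapreduce\lib\*,
    %HADOOP_YARN_HOME%\share\hadoop\yarn\*,
    %HADOOP_YARN_HOME%\share\hadoop\yarn\lib\*
  </value>
</property>
```

The entries follow the same order as the default value of the property, with the two MapReduce directories added, so the result stays easy to compare against the single-home version.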

Note :

The default CLASSPATH entries use the Linux format for environment variables (e.g. $HADOOP_COMMON_HOME). That is probably why a YARN application cannot find the required Hadoop classes on the classpath in a Windows environment. The Hadoop jars need to be added using the Windows format for environment variables (e.g. %HADOOP_HOME%).

Property: yarn.application.classpath

Description: CLASSPATH for YARN applications. A comma-separated list of CLASSPATH entries.

Default value: $HADOOP_CONF_DIR, $HADOOP_COMMON_HOME/share/hadoop/common/*, $HADOOP_COMMON_HOME/share/hadoop/common/lib/*, $HADOOP_HDFS_HOME/share/hadoop/hdfs/*, $HADOOP_HDFS_HOME/share/hadoop/hdfs/lib/*, $HADOOP_YARN_HOME/share/hadoop/yarn/*, $HADOOP_YARN_HOME/share/hadoop/yarn/lib/*

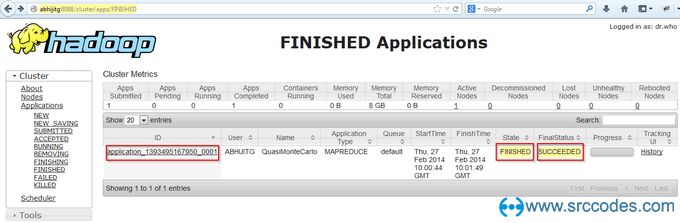

Verify

Re-run the example to verify the fix.

Command Prompt

C:\hadoop>bin\yarn jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar pi 5 50

Number of Maps = 5

Samples per Map = 50

Wrote input for Map #0

Wrote input for Map #1

Wrote input for Map #2

Wrote input for Map #3

Wrote input for Map #4

Starting Job

14/02/27 15:30:42 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

14/02/27 15:30:43 INFO input.FileInputFormat: Total input paths to process : 5

14/02/27 15:30:44 INFO mapreduce.JobSubmitter: number of splits:5

:

:

:

14/02/27 15:30:44 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1393495167950_0001

14/02/27 15:30:44 INFO impl.YarnClientImpl: Submitted application application_1393495167950_0001 to ResourceManager at /0.0.0.0:8032

14/02/27 15:30:44 INFO mapreduce.Job: The url to track the job: http://ABHIJITG:8088/proxy/application_1393495167950_0001/

14/02/27 15:30:44 INFO mapreduce.Job: Running job: job_1393495167950_0001

14/02/27 15:31:01 INFO mapreduce.Job: Job job_1393495167950_0001 running in uber mode : false

14/02/27 15:31:01 INFO mapreduce.Job: map 0% reduce 0%

14/02/27 15:31:24 INFO mapreduce.Job: map 40% reduce 0%

14/02/27 15:31:25 INFO mapreduce.Job: map 80% reduce 0%

14/02/27 15:31:27 INFO mapreduce.Job: map 100% reduce 0%

14/02/27 15:31:49 INFO mapreduce.Job: map 100% reduce 100%

14/02/27 15:31:50 INFO mapreduce.Job: Job job_1393495167950_0001 completed successfully

14/02/27 15:31:51 INFO mapreduce.Job: Counters: 43

File System Counters

FILE: Number of bytes read=116

FILE: Number of bytes written=482348

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=1335

HDFS: Number of bytes written=215

HDFS: Number of read operations=23

HDFS: Number of large read operations=0

HDFS: Number of write operations=3

Job Counters

Launched map tasks=5

Launched reduce tasks=1

Data-local map tasks=5

Total time spent by all maps in occupied slots (ms)=109868

Total time spent by all reduces in occupied slots (ms)=22635

Map-Reduce Framework

Map input records=5

Map output records=10

Map output bytes=90

Map output materialized bytes=140

Input split bytes=745

Combine input records=0

Combine output records=0

Reduce input groups=2

Reduce shuffle bytes=140

Reduce input records=10

Reduce output records=0

Spilled Records=20

Shuffled Maps =5

Failed Shuffles=0

Merged Map outputs=5

GC time elapsed (ms)=1221

CPU time spent (ms)=4410

Physical memory (bytes) snapshot=1237839872

Virtual memory (bytes) snapshot=1675640832

Total committed heap usage (bytes)=1123549184

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=590

File Output Format Counters

Bytes Written=97

Job Finished in 68.624 seconds

Estimated value of Pi is 3.16800000000000000000

Note: Estimated value of Pi will be more accurate for larger 'Number of Maps' and 'Samples per Map' inputs.

Finished Applications - http://abhijitg:8088/cluster/apps/FINISHED

